Tracking API

The Spectacular AI tracking API (a.k.a. “VIO” API) consists of the common output types relevant for real-time 6-degree-of-freedom pose tracking.

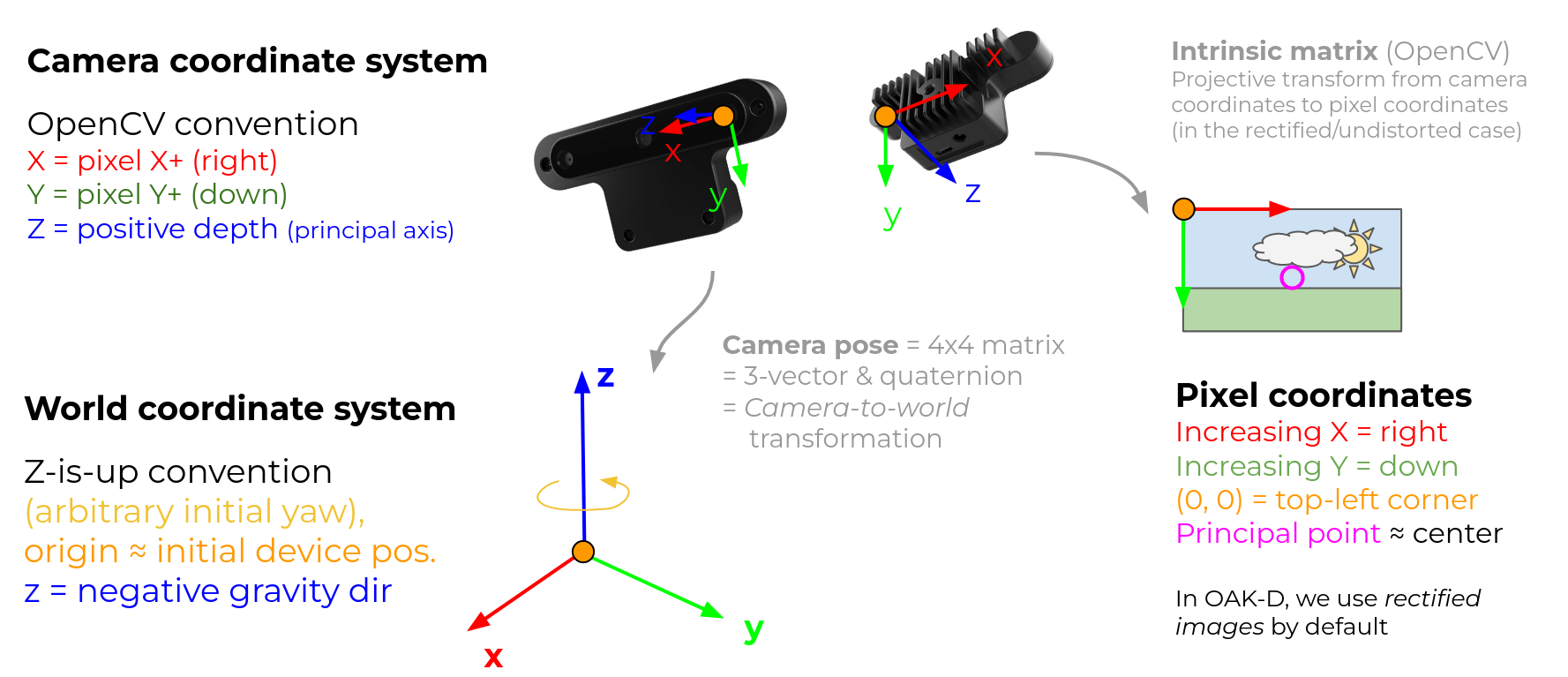

Coordinate systems

The SDK uses the following coordinate conventions, which are also illustrated in the accompanying diagram:

World coordinate system: Right-handed Z-is-up

Camera coordinate system: OpenCV convention (see here for a nice illustration), which is also right-handed

These conventions are different from, e.g., Intel RealSense SDK (cf. here), ARCore, Unity and most OpenGL tutorials, most of which use a “Y-is-up” coordinate system, often different camera coordinate systems, and sometimes different pixel (or “NDC”) coordinate conventions.
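As a minimal sketch of what moving between such conventions can involve, the snippet below rotates a point from a right-handed Z-is-up frame into a right-handed Y-is-up frame. The target frame and the function name `z_up_to_y_up` are assumptions made for illustration; the exact axis mapping required by a particular engine (Unity, for example, is left-handed) has to be checked against that engine's documentation.

```python
def z_up_to_y_up(point):
    """Rotate a right-handed Z-up point into a right-handed Y-up frame.

    Assumed (hypothetical) target frame: X is unchanged, the old Z axis
    becomes Y, and the old Y axis becomes -Z. This is a -90 degree
    rotation about the X axis, so handedness is preserved.
    """
    x, y, z = point
    return (x, z, -y)


# The Z-up "up" direction ends up along the Y axis of the new frame.
up_in_y_up_frame = z_up_to_y_up((0, 0, 1))
```

Because this is a pure rotation, distances and angles are unchanged; only the axis labels differ.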

By default, the spectacularAI.Pose object returned by the Spectacular AI SDK uses the left camera as the local reference frame.

To get the pose for another camera, use spectacularAI.VioOutput.getCameraPose() (or spectacularAI.depthai.Session.getRgbCameraPose() on OAK-Ds).

Tracking status

The state of the SDK is determined by spectacularAI.VioOutput.status.

If the SDK is operating normally, the status is TRACKING.

If the status turns into LOST_TRACKING, this means that the algorithm has degraded into a state where it has to be re-initialized.

The pose information in a VioOutput with status LOST_TRACKING is not reliable and must not be used. For example, in an AR/VR headset, a “lost tracking” message can be displayed to the user.
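The status handling described above could be sketched as follows. The `TrackingStatus` enum here is a local stand-in that mirrors the member values listed in this reference, not the SDK class itself, and `describe_status` is a hypothetical helper. Treating INIT as "pose not usable yet" is an assumption of this sketch.

```python
from enum import IntEnum


class TrackingStatus(IntEnum):
    """Stand-in mirroring the values listed for spectacularAI.TrackingStatus."""
    INIT = 0
    TRACKING = 1
    LOST_TRACKING = 2


def describe_status(status):
    """Return (pose_is_usable, user_message) for a tracking status."""
    if status == TrackingStatus.TRACKING:
        return True, ""
    if status == TrackingStatus.LOST_TRACKING:
        # The pose must not be used; tell the user instead.
        return False, "Lost tracking"
    # Assumption: poses are ignored while the system is still initializing.
    return False, "Initializing"


usable, message = describe_status(TrackingStatus.LOST_TRACKING)
```

In an application, the same check would be applied to the `status` property of each output before its pose is consumed.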

6-DoF pose output

Note: Do not store the VioOutput or GnssVioOutput classes to internal data structures for extended periods of time. These objects are meant to be processed by the user shortly after being emitted by the SDK. Storing them for longer periods of time leads to the depletion of the SDK’s internal output buffers, which can cause it to terminate with a fatal error.
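One way to follow this advice is to copy the values you need into plain Python data and let the output object go. The sketch below uses `SimpleNamespace` objects as hypothetical stand-ins for an SDK output; the attribute names (`pose`, `time`, `position`) follow the C++ struct members listed later in this reference and are an assumption as far as the Python bindings are concerned.

```python
from types import SimpleNamespace


def snapshot(vio_out):
    """Copy the fields needed later into plain Python values."""
    p = vio_out.pose.position
    return {
        "time": float(vio_out.pose.time),
        "position": (float(p.x), float(p.y), float(p.z)),
    }


# Hypothetical stand-in for an SDK output object, for illustration only.
fake_output = SimpleNamespace(
    pose=SimpleNamespace(
        time=1.5,
        position=SimpleNamespace(x=0.1, y=0.2, z=0.3),
    )
)

# The history holds plain data, not references to the output object.
history = [snapshot(fake_output)]
```

Only the copied numbers are kept, so nothing in `history` holds on to the SDK's output buffers.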

- class spectacularAI.Camera(self: spectacularAI.Camera, arg0: List[List[float[3]][3]], arg1: int, arg2: int)

Represents the intrinsic parameters of a particular camera. If the input image is distorted, the camera and projection matrices correspond to the undistorted / rectified image.

Build a pinhole camera

- getIntrinsicMatrix(self: spectacularAI.Camera) numpy.ndarray

3x3 intrinsic camera matrix (OpenCV convention, undistorted).

- getProjectionMatrixOpenGL(self: spectacularAI.Camera, arg0: float, arg1: float) numpy.ndarray

4x4 projection matrix for OpenGL (undistorted)

- pixelToRay(self: spectacularAI.Camera, arg0: spectacularAI.PixelCoordinates) object

Convert pixel coordinates to camera coordinates

- Parameters:

arg0 – PixelCoordinates: pixel coordinates.

- Returns:

Vector3d: ray in camera coordinates on successful conversion. None otherwise.

- rayToPixel(self: spectacularAI.Camera, arg0: spectacularAI.Vector3d) object

Convert camera coordinates to pixel coordinates

- Parameters:

arg0 – Vector3d: ray in camera coordinates.

- Returns:

PixelCoordinates: pixel coordinates on successful conversion (note that the pixel can be outside image boundaries). None otherwise.
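To make the relationship between the intrinsic matrix and these two conversions concrete, here is a minimal sketch of an ideal (undistorted) pinhole model. It is not the SDK's implementation: the function names are hypothetical, the intrinsic values are made up, and returning None for points that are not in front of the camera is an assumption about when a conversion fails.

```python
def ray_to_pixel(K, ray):
    """Project a camera-frame ray (x, y, z) with an ideal pinhole model.

    K is a 3x3 row-major intrinsic matrix
    [[fx, 0, cx], [0, fy, cy], [0, 0, 1]] (OpenCV convention).
    Returns (u, v), or None if the point is not in front of the camera.
    """
    x, y, z = ray
    if z <= 0.0:
        return None
    fx, cx = K[0][0], K[0][2]
    fy, cy = K[1][1], K[1][2]
    return (fx * x / z + cx, fy * y / z + cy)


def pixel_to_ray(K, pixel):
    """Inverse mapping: pixel (u, v) to a camera-frame ray with z = 1."""
    u, v = pixel
    return ((u - K[0][2]) / K[0][0], (v - K[1][2]) / K[1][1], 1.0)


# Made-up intrinsics: focal length 500 px, principal point (320, 240).
K = [[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]]
center_pixel = ray_to_pixel(K, (0.0, 0.0, 1.0))  # optical axis -> (cx, cy)
```

Note that the projected pixel is not clamped to the image, which matches the remark above that a pixel can lie outside the image boundaries.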

- class spectacularAI.CameraPose

Represents the pose (position & orientation) and other parameters of a particular camera.

- getCameraToWorldMatrix(self: spectacularAI.CameraPose) numpy.ndarray

4x4 homogeneous camera-to-world matrix

- getPosition(self: spectacularAI.CameraPose) spectacularAI.Vector3d

Vector3d: position of the camera

- getWorldToCameraMatrix(self: spectacularAI.CameraPose) numpy.ndarray

4x4 homogeneous world-to-camera matrix

- pixelToWorld(self: spectacularAI.CameraPose, arg0: spectacularAI.PixelCoordinates) object

Convert pixel coordinates to rays in world coordinates

- Parameters:

arg0 – PixelCoordinates: pixel coordinates to convert.

- Returns:

A tuple with the Vector3d origin of the ray in world coordinates and the Vector3d direction of the ray from the origin on successful conversion. None otherwise.

- worldToPixel(self: spectacularAI.CameraPose, arg0: spectacularAI.Vector3d) object

Convert world coordinates to pixel coordinates

- Parameters:

arg0 – Vector3d: point in world coordinates.

- Returns:

PixelCoordinates: pixel coordinates on successful conversion (note that the pixel can be outside image boundaries). None otherwise.
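The camera-to-world and world-to-camera matrices are inverses of each other. For a rigid transform, the inverse has a closed form, sketched below with plain nested lists indexed as m[row][col]. The helper names and the example pose are made up for illustration; with the SDK you would simply call the corresponding getters.

```python
def invert_rigid(m):
    """Invert a 4x4 rigid transform [R t; 0 1] as [R^T, -R^T t; 0 1]."""
    rt = [[m[c][r] for c in range(3)] for r in range(3)]  # transpose of R
    t = [m[r][3] for r in range(3)]
    new_t = [-sum(rt[r][c] * t[c] for c in range(3)) for r in range(3)]
    return [rt[r] + [new_t[r]] for r in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_point(m, p):
    """Apply a 4x4 matrix to a 3D point using homogeneous coordinates."""
    return tuple(
        sum(m[r][c] * p[c] for c in range(3)) + m[r][3] for r in range(3)
    )


# Made-up camera-to-world pose: 90 degree rotation about Z, camera at (1, 2, 3).
cam_to_world = [
    [0.0, -1.0, 0.0, 1.0],
    [1.0, 0.0, 0.0, 2.0],
    [0.0, 0.0, 1.0, 3.0],
    [0.0, 0.0, 0.0, 1.0],
]
world_to_cam = invert_rigid(cam_to_world)

# The camera position in world coordinates maps to the camera-frame origin.
origin_in_camera = transform_point(world_to_cam, (1.0, 2.0, 3.0))
```

The translation column of the camera-to-world matrix is the camera position in world coordinates, which is why it maps back to the origin of the camera frame.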

- class spectacularAI.VioOutput

Main output structure

- asJson(self: spectacularAI.VioOutput) str

a JSON representation of this object

- getCameraPose(self: spectacularAI.VioOutput, arg0: int) spectacularAI.CameraPose

CameraPose corresponding to a camera whose index is given as the parameter. Index 0 corresponds to the primary camera and index 1 the secondary camera.

- property globalPose

GnssVioOutput: global pose, only returned if GNSS information is provided via Session.addGnss(…)

- property positionCovariance

position uncertainty, 3x3 covariance matrix

- property status

current TrackingStatus

- property tag

input tag from addTrigger. Set to 0 for other outputs.

- property velocityCovariance

velocity uncertainty, 3x3 covariance matrix

- class spectacularAI.GnssVioOutput

GNSS-VIO output

- property coordinates

current WgsCoordinates

- property enuPositionCovariance

ENU position uncertainty, 3x3 covariance matrix

- getEnuCameraPose(self: spectacularAI.GnssVioOutput, arg0: int, arg1: spectacularAI.WgsCoordinates) spectacularAI::CameraPose

Get the global pose of a particular camera. The “world” coordinate system of the camera pose is an East-North-Up system, whose origin is at the given WGS84 coordinates.

- property orientation

current Quaternion

- property status

current GnssVioOutputStatus

- property velocityCovariance

velocity uncertainty, 3x3 covariance matrix

Defined in #include <spectacularAI/output.hpp>

-

namespace spectacularAI

Typedefs

-

using VioOutputPtr = std::shared_ptr<const VioOutput>

-

struct Camera

Interface for accessing the geometric camera properties of a calibrated camera.

Public Functions

-

virtual bool pixelToRay(const PixelCoordinates &pixel, Vector3d &ray) const = 0

Convert pixel coordinates to camera coordinates

- Parameters:

pixel –

ray –

- Returns:

true if conversion succeeded

-

virtual bool rayToPixel(const Vector3d &ray, PixelCoordinates &pixel) const = 0

Convert camera coordinates to pixel coordinates

- Parameters:

ray –

pixel –

- Returns:

true if conversion succeeded

-

virtual Matrix3d getIntrinsicMatrix() const = 0

Defined as the rectified intrinsic matrix if there’s distortion.

- Returns:

OpenCV convention

\[\begin{split} \left[ \begin{matrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{matrix} \right] \end{split}\]

-

virtual Matrix4d getProjectionMatrixOpenGL(double nearClip, double farClip) const = 0

Project from camera coordinates to normalized device coordinates (NDC).

- Parameters:

nearClip –

farClip –

-

virtual ~Camera()

-

struct CameraPose

Pose of camera and conversions between world, camera, and pixel coordinates.

Public Functions

-

Matrix4d getWorldToCameraMatrix() const

Matrix that converts homogeneous world coordinates to homogeneous camera coordinates

-

Matrix4d getCameraToWorldMatrix() const

Matrix that converts homogeneous camera coordinates to homogeneous world coordinates

-

Vector3d getPosition() const

Position in world coordinates

-

bool pixelToWorld(const PixelCoordinates &pixel, Vector3d &origin, Vector3d &ray) const

Convert pixel coordinates to rays in world coordinates.

- Parameters:

pixel –

origin – current camera position in world coordinates

ray – direction of ray from the origin

- Returns:

true if conversion succeeded

-

bool worldToPixel(const Vector3d &point, PixelCoordinates &pixel) const

Convert world coordinates to pixel coordinates

- Parameters:

point –

pixel –

- Returns:

true if conversion succeeded

-

struct VioOutput

Main output structure.

These objects are meant to be processed soon after they are emitted and they must not be stored in custom data structures for extended periods of time. Storing these objects for too long will exhaust the SDK’s output buffers and cause it to drop outputs or eventually terminate with a fatal error.

Public Functions

-

virtual CameraPose getCameraPose(int cameraId) const = 0

Current pose in camera coordinates

- Parameters:

cameraId – 0 for primary, 1 for secondary camera

-

virtual std::string asJson() const

Returns the output to a JSON string if supported. Otherwise returns an empty string.

-

virtual ~VioOutput()

Public Members

-

TrackingStatus status

Current tracking status

-

Pose pose

The current pose, with the timestamp in the clock used for input sensor data and camera frames.

-

Vector3d velocity

Velocity vector (xyz) in m/s in the coordinate system used by pose.

-

Vector3d angularVelocity

Angular velocity vector in SI units (rad/s) and the coordinate system used by pose.

-

Vector3d acceleration

Linear acceleration in SI units (m/s²).

Only available in low-latency mode, otherwise set to zero.

-

Matrix3d positionCovariance

Uncertainty of the current position as a 3x3 covariance matrix

-

Matrix3d velocityCovariance

Uncertainty of velocity as a 3x3 covariance matrix

-

std::vector<Pose> poseTrail

List of poses, where the first element corresponds to current pose and the following (zero or more) values are the recent smoothed historical positions

-

std::vector<FeaturePoint> pointCloud

Point cloud (list of FeaturePoints) that correspond to features currently seen in the primary camera frame.

-

int tag

The input frame tag. This is the value given in addFrame… methods

-

std::shared_ptr<const GnssVioOutput> globalPose

GNSS-VIO output if available, otherwise {} (nullptr).

Do not store this shared pointer into custom data structures for an extended period of time, to avoid exhausting the SDK’s output buffer, which may lead to the SDK terminating with a fatal error.

-

struct GnssVioOutput

Main output class for global coordinates. Used in both GNSS-VIO and VIO+VPS operating modes.

Public Functions

-

virtual double getCourse() const = 0

Course over ground computed from velocity. Clockwise from North in degrees [0, 360). 0 = North, 90 = East, 180 = South, 270 = West.

This is the direction the device is moving, not direction it’s facing (heading/yaw).
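The definition of course over ground can be illustrated with a short sketch (in Python, for brevity) that derives it from the horizontal part of an ENU velocity. This is an illustration of the stated convention, not the SDK's implementation; the function name is hypothetical and the behavior at zero velocity is left unspecified here.

```python
import math


def course_from_enu_velocity(v_east, v_north):
    """Course over ground in degrees, clockwise from North, in [0, 360)."""
    # atan2(east, north) measures the angle from North toward East,
    # which is the clockwise direction when viewed from above.
    course = math.degrees(math.atan2(v_east, v_north)) % 360.0
    # Guard against floating-point rounding that can produce exactly 360.0.
    return 0.0 if course >= 360.0 else course


course_east = course_from_enu_velocity(1.0, 0.0)   # moving due East
course_west = course_from_enu_velocity(-1.0, 0.0)  # moving due West
```

A device moving East while facing North would report a course near 90 and a heading near 0, which is the distinction drawn above.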

-

virtual double getHeading() const = 0

Heading (Yaw) computed from orientation. Clockwise from North in degrees [0, 360). 0 = North, 90 = East, 180 = South, 270 = West.

-1 if the imuForward vector is not defined in calibration.

This is the direction the device is facing, not direction it’s moving (course).

-

virtual double getPitch() const = 0

Pitch (Tilt) computed from orientation. [-90, 90] -90 = nose pointing down, 0 = level, 90 = nose pointing up.

-1 if the imuForward vector is not defined in calibration.

-

virtual double getRoll() const = 0

Roll (Bank) computed from orientation. [-180, 180]. -180 = upside down, -90 = right wing up, 0 = level, 90 = right wing down.

The imuToOutput matrix in calibration must use IMU to FRD (Front-Right-Down) convention for the value to be correct.

-

virtual CameraPose getEnuCameraPose(int cameraId, WgsCoordinates enuOrigin) const = 0

Get the global pose of a particular camera. The “world” coordinate system of the camera pose is an East-North-Up system, whose origin is at the given WGS84 coordinates.

- Parameters:

cameraId – index of the camera whose pose is returned

enuOrigin – the origin of the ENU coordinate system

-

virtual ~GnssVioOutput()

Public Members

-

WgsCoordinates coordinates

Position in global coordinates in WGS84 (mean)

-

Quaternion orientation

Orientation of the device as quaternion representing local-to-ENU transformation, i.e., a local-to-world where the world coordinates are defined in an East-North-Up coordinate system. Note that the origin of the ENU coordinates system does not matter in this context.

See the getHeading(), getPitch(), getRoll() for obtaining the Euler angles.

-

Vector3d velocity

Velocity in ENU coordinates (m/s)

-

Vector3d angularVelocity

Angular velocity in ENU coordinates

-

Matrix3d enuPositionCovariance

Uncertainty of the estimate as a covariance in an East-North-Up (ENU) coordinate system. See the helper methods for obtaining a single vertical and horizontal number.

-

Matrix3d velocityCovariance

Uncertainty of velocity as a 3x3 covariance matrix (in ENU coordinates)

-

GnssVioOutputStatus status

Output status tells what data the global output is mainly based on

Common types

- class spectacularAI.Pose

Represents the pose (position & orientation) of a device at a given time. This typically corresponds to the pose of the IMU (configurable). See CameraPose for exact poses of the cameras.

- asMatrix(self: spectacularAI.Pose) numpy.ndarray

4x4 matrix that converts homogeneous local coordinates to homogeneous world coordinates

- fromMatrix(self: float, arg0: List[List[float[4]][4]]) spectacularAI.Pose

Create a pose from a timestamp and 4x4 local-to-world matrix

- property orientation

Quaternion: orientation of the IMU / camera, local-to-world

- property time

float: timestamp in seconds, synchronized with device monotonic time (not host)

- class spectacularAI.Vector3d(*args, **kwargs)

Vector in R^3. Can represent, e.g., velocity, position or angular velocity. Each property is a float.

Overloaded function.

__init__(self: spectacularAI.Vector3d) -> None

__init__(self: spectacularAI.Vector3d, arg0: float, arg1: float, arg2: float) -> None

- property x

- property y

- property z

- class spectacularAI.Vector3f(*args, **kwargs)

Vector in R^3. Single precision.

Overloaded function.

__init__(self: spectacularAI.Vector3f) -> None

__init__(self: spectacularAI.Vector3f, arg0: float, arg1: float, arg2: float) -> None

- property x

- property y

- property z

- class spectacularAI.Quaternion

Quaternion representation of a rotation. Hamilton convention. Each property is a float.

- property w

- property x

- property y

- property z
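For readers who want to see what a local-to-world Hamilton quaternion does to a vector, the sketch below converts a unit quaternion (w, x, y, z) into a 3x3 row-major rotation matrix using the standard formula. The helper names are hypothetical and plain lists are used instead of SDK types.

```python
import math


def quaternion_to_rotation(w, x, y, z):
    """3x3 row-major rotation matrix of a unit Hamilton quaternion."""
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def rotate(R, v):
    """Multiply a 3x3 matrix with a 3-vector."""
    return tuple(sum(R[r][c] * v[c] for c in range(3)) for r in range(3))


# 90 degree rotation about the Z axis: the local X axis ends up along world Y.
half = math.radians(90.0) / 2.0
R = quaternion_to_rotation(math.cos(half), 0.0, 0.0, math.sin(half))
x_axis_in_world = rotate(R, (1.0, 0.0, 0.0))
```

The formula assumes a normalized quaternion; normalize first if the input may have drifted from unit length.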

- class spectacularAI.TrackingStatus(self: spectacularAI.TrackingStatus, value: int)

Members:

INIT

TRACKING

LOST_TRACKING

- INIT = <TrackingStatus.INIT: 0>

- LOST_TRACKING = <TrackingStatus.LOST_TRACKING: 2>

- TRACKING = <TrackingStatus.TRACKING: 1>

- property name

- property value

- class spectacularAI.ColorFormat(self: spectacularAI.ColorFormat, value: int)

Members:

NONE

GRAY

RGB

RGBA

GRAY16

- GRAY = <ColorFormat.GRAY: 1>

- GRAY16 = <ColorFormat.GRAY16: 7>

- NONE = <ColorFormat.NONE: 0>

- RGB = <ColorFormat.RGB: 2>

- RGBA = <ColorFormat.RGBA: 3>

- property name

- property value

- class spectacularAI.Bitmap

Represents a grayscale or RGB bitmap

- getColorFormat(self: spectacularAI.Bitmap) spectacularAI.ColorFormat

- getHeight(self: spectacularAI.Bitmap) int

int: bitmap height

- getWidth(self: spectacularAI.Bitmap) int

int: bitmap width

- toArray(self: spectacularAI.Bitmap) numpy.ndarray

Returns array representation of the bitmap

- class spectacularAI.Frame

A camera frame with a pose

- property cameraPose

CameraPose corresponding to this camera

- property index

Camera index

- class spectacularAI.WgsCoordinates(self: spectacularAI.WgsCoordinates)

Global coordinates, WGS-84.

- property altitude

- property latitude

- property longitude

- class spectacularAI.PixelCoordinates(*args, **kwargs)

Coordinates of an image pixel (x, y), subpixel accuracy (float).

Overloaded function.

__init__(self: spectacularAI.PixelCoordinates) -> None

__init__(self: spectacularAI.PixelCoordinates, arg0: float, arg1: float) -> None

- property x

- property y

- class spectacularAI.FeaturePoint(self: spectacularAI.FeaturePoint)

Sparse 3D feature point observed from a certain camera frame.

- property id

An int ID to identify the same points in different frames.

- property pixelCoordinates

PixelCoordinates of the observation in the camera frame.

Types in #include <spectacularAI/types.hpp>

Defines

-

SPECTACULAR_AI_API

Identifies functions and methods that are a part of the API

-

SPECTACULAR_AI_CORE_API

Identifies functions and methods that are a part of the core API

-

namespace spectacularAI

Typedefs

-

using Matrix3d = std::array<std::array<double, 3>, 3>

A 3x3 matrix, row major (accessed as m[row][col]). Also note that when the matrix is symmetric (like covariance matrices), there is no difference between row-major and column-major orderings.

-

using Matrix4d = std::array<std::array<double, 4>, 4>

A 4x4 matrix, row major (accessed as m[row][col]). Typically used with homogeneous coordinates.

Enums

-

enum class TrackingStatus

6-DoF pose tracking status

Values:

-

enumerator INIT

Initial status when tracking starts and is still initializing

-

enumerator TRACKING

VIO is in a good state

-

enumerator LOST_TRACKING

Tracking has failed. Outputs are no longer produced until the system recovers, which will be reported as another tracking state

-

enum class GnssVioOutputStatus

GNSS-VIO output type

Values:

-

enumerator VIO

The outputs are based on VIO. No recent fixes from VPS or GNSS

-

enumerator GNSS

The output is fused with recent GNSS data

-

enumerator VPS

The output is based on recent VPS-based map matching results

-

enum class ColorFormat

Specifies the pixel format of a bitmap

Values:

-

enumerator NONE

-

enumerator GRAY

-

enumerator RGB

-

enumerator RGBA

-

enumerator RGBA_EXTERNAL_OES

-

enumerator BGR

-

enumerator BGRA

-

enumerator GRAY16

-

enumerator FLOAT32

-

enumerator ENCODED_H264

-

enumerator YUYV422

-

enumerator Y10P

-

struct PixelCoordinates

Coordinates of an image pixel, subpixel accuracy

Public Members

-

float x

-

float y

-

struct Vector3d

Vector in \( \mathbb R^3 \). Can represent, e.g., velocity, position or angular velocity

Public Members

-

double x

-

double y

-

double z

-

struct Vector3f

Vector in \( \mathbb R^3 \) (single precision)

Public Members

-

float x

-

float y

-

float z

-

struct Quaternion

Quaternion representation of a rotation. Hamilton convention.

Public Members

-

double x

-

double y

-

double z

-

double w

-

struct Pose

Represents the pose (position & orientation) of a device at a given time

Public Functions

-

Matrix4d asMatrix() const

Matrix that converts homogeneous local coordinates to homogeneous world coordinates

Public Members

-

double time

Timestamp in seconds. Monotonically increasing

-

Vector3d position

3D position in a right-handed metric coordinate system where the z-axis points up

-

Quaternion orientation

Orientation quaternion in the same coordinate system as position

-

struct WgsCoordinates

Global coordinates, WGS-84

Public Members

-

double latitude

Latitude in degrees.

-

double longitude

Longitude in degrees.

-

double altitude

Altitude in meters.

-

struct FeaturePoint

Sparse 3D feature point observed from a certain camera frame.

Public Members

-

int64_t id

An integer ID to identify same points in different revisions of the point cloud, e.g., the same point observed from a different camera frame.

-

PixelCoordinates pixelCoordinates

Pixel coordinates of the observation in the camera frame. If the pixel coordinates are not available, set to { -1, -1 }. The coordinates are not available in case of trigger outputs that do not correspond to any camera frame.
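When drawing feature points on an image, the placeholder value has to be skipped. The following Python sketch shows one way to do that; the `SimpleNamespace` objects are hypothetical stand-ins for feature points, and `has_pixel_coordinates` is not an SDK function.

```python
from types import SimpleNamespace


def has_pixel_coordinates(point):
    """True unless the pixel coordinates are the { -1, -1 } placeholder."""
    px = point.pixelCoordinates
    return not (px.x == -1 and px.y == -1)


# Hypothetical stand-ins for two feature points, for illustration only.
points = [
    SimpleNamespace(id=7, pixelCoordinates=SimpleNamespace(x=12.5, y=40.25)),
    SimpleNamespace(id=8, pixelCoordinates=SimpleNamespace(x=-1.0, y=-1.0)),
]
drawable_ids = [p.id for p in points if has_pixel_coordinates(p)]
```

Points without pixel coordinates still carry a 3D position, so they remain usable for purposes that do not need the image observation.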

-

Vector3d position

Global position of the feature point

-

int status = 0

Implementation-defined status/type

-

struct Bitmap

Simple wrapper for a bitmap in RAM (not GPU RAM).

Public Functions

-

cv::Mat asOpenCV(bool flipColors = true)

Create an OpenCV matrix that may refer to this image as a shallow copy. Use .clone() to make a deep copy if needed. If flipColors = true (default), automatically converts from RGB to BGR if necessary.

-

virtual ~Bitmap()

-

virtual int getWidth() const = 0

Image width in pixels

-

virtual int getHeight() const = 0

Image height in pixels

-

virtual ColorFormat getColorFormat() const = 0

Pixel color format / channel configuration

-

virtual const std::uint8_t *getDataReadOnly() const = 0

Data in row major order. Rows must be contiguous. 8-32 bits per pixel, as defined by ColorFormat.

-

virtual std::uint8_t *getDataReadWrite() = 0

Public Static Functions

-

static std::unique_ptr<Bitmap> create(int width, int height, ColorFormat colorFormat)

Create a bitmap with undefined contents

-

static std::unique_ptr<Bitmap> createReference(int width, int height, ColorFormat colorFormat, std::uint8_t *data, int rowStride = 0)

Create bitmap wrapper for plain data (not copied)

-

static std::unique_ptr<Bitmap> createReference(cv::Mat &mat)

Create a bitmap wrapper from an OpenCV matrix

-

struct CameraRayTo3DMatch

Match between a 3D ray from the camera and a 3D point in world coordinates

-

struct CameraRayToWgsMatch

Match between a 3D ray from the camera and a point in WGS-84 coordinates

Public Members

-

Vector3d ray

Ray in camera coordinates.

-

WgsCoordinates coordinates

Map point in WGS-84 coordinates.

-

double variance = -1

Uncertainty. The default corresponds to variance of 1.0.

Additional utilities in #include <spectacularAI/util.hpp>

-

namespace spectacularAI

Functions

-

double getHorizontalUncertainty(const Matrix3d &covariance)

Get a scalar horizontal uncertainty value in meters from a covariance matrix.

This is an RMS (“1-sigma”) value. In the general anisotropic case, where the covariance matrix is a rotated version of \( {\rm diag}(s_x^2, s_y^2) \), it is defined as \( \sqrt{s_x^2 + s_y^2} \). This is also equivalent to the Frobenius norm of the 2x2 horizontal part of the covariance matrix. If the horizontal uncertainty is isotropic, this is the same as the 1D standard deviation along the X or Y axis.

-

double getVerticalUncertainty(const Matrix3d &covariance)

Vertical uncertainty (standard deviation / p67) from a covariance matrix

-

Matrix3d buildCovarianceMatrix(double horizontalSigma, double verticalSigma)

Construct a covariance matrix from vertical and horizontal uncertainty components specified as 1-sigma values (i.e., 1D standard deviations). The resulting covariance is isotropic in the XY plane.
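One plausible reading of this description, sketched in Python, is a diagonal matrix with the squared horizontal sigma on the X and Y entries and the squared vertical sigma on the Z entry. This is an assumption about the layout rather than the SDK's code, and the function names are hypothetical.

```python
import math


def build_covariance(horizontal_sigma, vertical_sigma):
    """Diagonal 3x3 covariance, isotropic in XY, from 1-sigma values."""
    h2 = horizontal_sigma ** 2
    v2 = vertical_sigma ** 2
    return [[h2, 0.0, 0.0], [0.0, h2, 0.0], [0.0, 0.0, v2]]


def vertical_uncertainty(covariance):
    """Vertical standard deviation: square root of the Z variance."""
    return math.sqrt(covariance[2][2])


# 0.5 m per horizontal axis, 2 m vertically.
cov = build_covariance(0.5, 2.0)
sigma_z = vertical_uncertainty(cov)
```

Covariance entries are variances, so they carry squared units (m²), while the sigma values are in meters.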

-

double getQuaternionYaw(const Quaternion &q)

Yaw angle of a local-to-world quaternion in degrees. The yaw is the rotation around the Z-axis, range is [-180, 180].
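For illustration, the yaw of a unit Hamilton quaternion can be computed with the standard Z-Y-X Euler angle formula, as in the Python sketch below. Whether the SDK uses exactly this formula and sign convention is an assumption; here a positive yaw is a counterclockwise rotation about the Z axis when viewed from above.

```python
import math


def quaternion_yaw_degrees(w, x, y, z):
    """Yaw (rotation about Z) of a unit quaternion, in degrees."""
    # atan2 returns values in [-pi, pi], i.e. [-180, 180] degrees.
    return math.degrees(
        math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    )


# Quaternion for a 90 degree rotation about the Z axis.
half = math.radians(90.0) / 2.0
yaw = quaternion_yaw_degrees(math.cos(half), 0.0, 0.0, math.sin(half))
```

Near pitch angles of ±90 degrees, the yaw and roll of any Euler decomposition become ill-conditioned, so the value should be interpreted with care there.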

-

double getQuaternionPitch(const Quaternion &q)

Pitch angle of a local-to-world quaternion in degrees. Positive pitch corresponds to the nose of the object pointing up, range is [-90, 90]

-

double getQuaternionRoll(const Quaternion &q)

Roll angle of a local-to-world quaternion in degrees. Positive roll corresponds to the right side dipping down, range is [-180, 180]

-

Quaternion eulerToQuaternion(double yawDeg, double pitchDeg, double rollDeg)

Converts local-to-world Euler angles (yaw, pitch, roll) to a quaternion.

-

std::string getSdkVersion()

Returns the Spectacular AI SDK version in the format “MAJOR.MINOR.PATCH”
